Don't trust people who don't use Claude Code

For they have not seen the future

The internet lit up this past week when Matt Shumer published his essay “Something Big is Happening.” In it, he describes something that many coders working with AI have felt in recent months. The ground beneath us is shaking. Recent advances in AI models and tooling are redefining our profession and soon these productive capabilities will be felt by the wider economy. He likens this moment to shortly before the world shut down for Covid-19: some people understood the world was about to change, but most had no idea what was coming.

Reactions to his piece were polarized. On one side were people who have actually used the tools he writes about and broadly shared his sentiment. On the other side, critics who haven’t used the tools decried the piece as propaganda from the AI industry to hype up its vaporware that amounts to little more than a novel form of Google search. Rather than engage with Shumer’s claims, these critics would denounce AI as “not actually reasoning” or “fundamentally incapable of producing something new.”

Reasonable people can disagree about whether Shumer is overly alarmist in response to AI progress or whether recent progress represents true exponential improvement which inevitably results in “AGI”. Personally, I don’t anticipate that LLMs will break the conventional paradigm of technological progress where new tools make people more productive rather than replacing them outright.

But to disparage his description of recent advances by claiming that AI can’t actually think is like quibbling about whether a car with a 350-horsepower engine can actually gallop like 350 horses. If you’ve never seen a car before, this might seem like a worthwhile topic of discussion. If you’ve driven down the highway at 70 mph, however, you understand that it’s so beside the point that it’s frankly not worth discussing.

The only reasonable conclusion I can draw about this form of criticism is that these AI critics have not driven down the highway. They have not used Claude Code or OpenAI Codex themselves and are unaware of what they’re capable of. I too would be skeptical of Shumer’s claims if I hadn’t used the tools he writes about!

So rather than argue with these critics in the abstract or accuse them of being irresponsible for weighing in on an important subject without having approached it with enough curiosity to actually use the tools they opine on, I will just show them.

Here are three concrete tasks I’ve accomplished with AI tools in the past month that have changed the way I work.

AI wrote me a financial report that I couldn’t have written myself

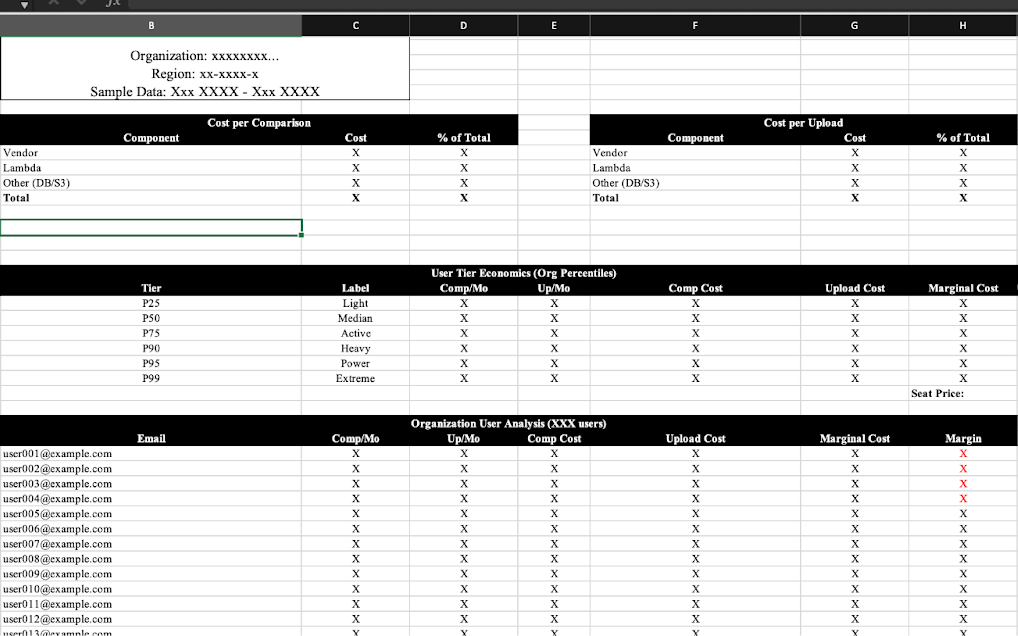

My startup, Version Story, sells software to law firms. While modeling our finances this past week, my cofounder Kevin needed to understand the marginal cost of expanding one of our existing accounts with new users.

This is a complex, multi-variable problem. Many of our Amazon Web Services (AWS) costs are fixed and amortize with scale. Some are step-function costs that amortize until hitting a critical inflection point, at which point we need to scale up. Many are variable and nearly impossible to calculate bottom-up, which makes it hard to say something like “one redline costs us X in AWS spend.”

I did not know how to approach this problem. So I asked Claude Code to help me write a script that could do it for me.

I gave it read access to an Amazon Web Services tool to fetch our billing data from the past quarter, and access to the Google BigQuery tool to read from our raw analytics data.

First, it analyzed all of our spend categories and quickly identified the fixed costs. For each variable cost category, it ran a linear regression analysis on the fly to correlate our spend with usage increases across actions like file uploads and document comparisons. It then wrote out its calculated cost-per-action estimates in a JSON file (a file used for storing data) that the script would use.

The structure of this file looked like the following:

Cost per file upload:

- Total cost: $N
- Breakdown:
  - Cost for AWS Service 1: $X
    - Notes: “Each file upload triggers Service 1 five times. Given the unit costs of the service…”
  - Cost for AWS Service 2: $Y
  - Cost for AWS Service 3: $Z

It broke down the cost of each of our key actions by estimating its cost across different AWS services. For each service, it described how it arrived at the figure so that future Claude Code sessions could recalculate it if our infrastructure changes.
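For readers who haven’t worked with JSON, here is a rough sketch of how a file like this could be laid out and sanity-checked. The key names (`total_cost_usd`, `breakdown`, `notes`), the helper `find_inconsistent_totals`, and every dollar figure are assumptions made up for the example; our actual file differs.

```python
import json
import math

# Hypothetical cost model for one action. All figures are invented.
cost_model = {
    "file_upload": {
        "total_cost_usd": 0.0065,
        "breakdown": [
            {
                "service": "AWS Service 1",
                "cost_usd": 0.005,
                "notes": "Each file upload triggers Service 1 five times.",
            },
            {"service": "AWS Service 2", "cost_usd": 0.001, "notes": ""},
            {"service": "AWS Service 3", "cost_usd": 0.0005, "notes": ""},
        ],
    }
}


def find_inconsistent_totals(model):
    """Return the actions whose total differs from the sum of its breakdown."""
    inconsistent = []
    for action, entry in model.items():
        breakdown_sum = sum(item["cost_usd"] for item in entry["breakdown"])
        if not math.isclose(breakdown_sum, entry["total_cost_usd"], rel_tol=1e-9):
            inconsistent.append(action)
    return inconsistent


# Serialize to JSON text, as it would be stored on disk.
serialized = json.dumps(cost_model, indent=2)
print(serialized)
print(find_inconsistent_totals(cost_model))
```

Keeping the notes next to each figure is what lets a later session, human or AI, see where a number came from before recalculating it.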

Claude Code then wrote a script that pulls a customer’s usage patterns over the past three months from Google BigQuery. It models a distribution of their usage data to project expected usage for the next 100 users, then projects expansion costs using the calculated cost assumptions from the file above.
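One simple way to do this kind of projection is to resample existing users’ usage and price it with the per-action costs. The sketch below uses bootstrap resampling as a stand-in for whatever distribution the real script fits; the function `project_expansion_cost`, the usage records, and the unit costs are all hypothetical.

```python
import random


def project_expansion_cost(user_history, unit_costs, new_users=100, seed=0):
    """Estimate monthly cost of new users by resampling existing users' usage.

    user_history: list of dicts mapping action name -> monthly count per user.
    unit_costs:   dict mapping action name -> cost per action in USD.
    """
    rng = random.Random(seed)  # seeded so the projection is repeatable
    # Draw one existing usage profile, with replacement, per new user.
    sampled_profiles = rng.choices(user_history, k=new_users)
    total = 0.0
    for profile in sampled_profiles:
        for action, count in profile.items():
            total += count * unit_costs[action]
    return total


# Invented usage profiles and unit costs, for illustration only.
history = [
    {"file_upload": 40, "comparison": 10},
    {"file_upload": 5, "comparison": 2},
    {"file_upload": 120, "comparison": 35},
]
costs = {"file_upload": 0.0065, "comparison": 0.02}

print(project_expansion_cost(history, costs))
```

Running the projection with several seeds gives a rough sense of the spread, which matters when a handful of heavy users drive most of the spend.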

The report includes a summary of our costs and margin based on the customer’s previous usage, with a granular analysis of per-user cost.

I don’t know how I’d have performed this analysis a year ago. My results would have certainly lacked the granularity and precision of this report. What would have taken most of a day, Claude Code completed in about an hour.

AI automated the worst part of my job

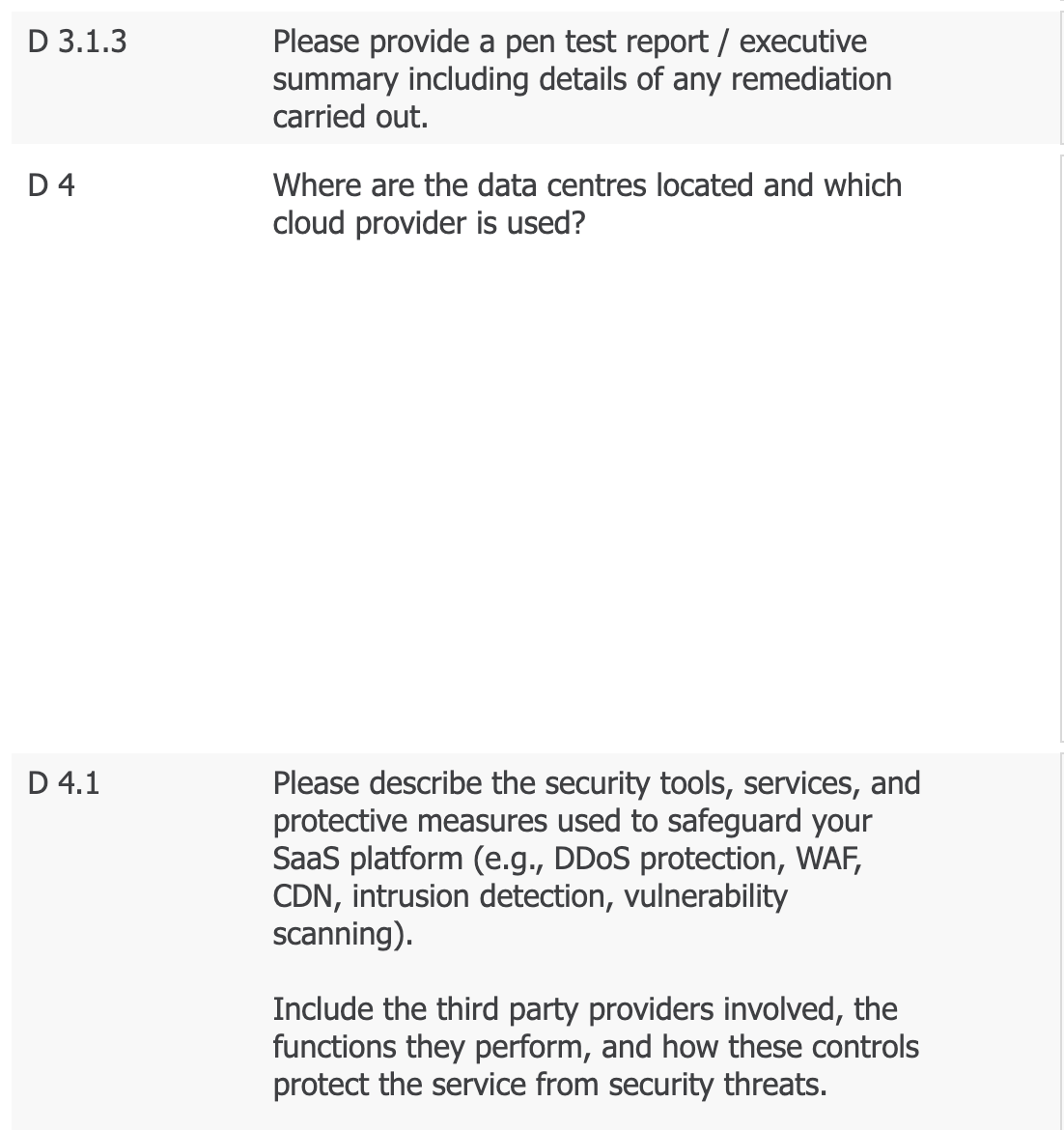

Every time we close a new deal with a large law firm, we need to fill out a compliance form. These forms ask about our security posture, certifications, data-access policies, etc.

The forms aren’t hard. We maintain good security practices and are SOC 2 compliant. They’re just remarkably tedious. Every form asks similar questions with slight variations. Each one takes hours of digging through precedent forms, looking for similar questions, copying and rewording answers to fit, or starting from scratch when you can’t find a good match. We used to hire contractors to fill out these forms.

With Claude Code, my cofounder built a system to automate them.

The core idea is a “knowledge base” — a single file that serves as our source of truth for every security question we’ve ever been asked. Each entry contains a canonical version of the question, our official answer, and references to the specific compliance frameworks and vendor forms where that question appears.

The system works in three phases. First, Claude Code extracts every question from a completed questionnaire, capturing the exact structure of the form, including question text, answer options, and field identifiers. Second, it maps each question against the knowledge base to find equivalent questions we’ve already answered. Third, it fills in the new form automatically, color-coding each answer by confidence: green for direct knowledge base matches, yellow for answers carried over from similar previous questions, and red for questions that need manual review.
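The mapping and color-coding phases can be sketched in a few lines. In our system Claude Code judges whether two questions are equivalent; the version below swaps in plain string similarity from Python’s `difflib` so that it runs on its own. The `classify` function, the 0.6 threshold, and the knowledge base entries are assumptions for illustration.

```python
from difflib import SequenceMatcher


def normalize(text):
    """Lowercase and collapse whitespace so trivial differences don't matter."""
    return " ".join(text.lower().split())


def classify(question, knowledge_base, similar_threshold=0.6):
    """Return (color, answer) for a new question.

    green:  the question matches a knowledge base entry exactly
    yellow: the closest entry is similar enough to carry its answer over
    red:    nothing close enough; needs manual review
    """
    target = normalize(question)
    best_entry, best_score = None, 0.0
    for entry in knowledge_base:
        canonical = normalize(entry["question"])
        if canonical == target:
            return "green", entry["answer"]
        score = SequenceMatcher(None, target, canonical).ratio()
        if score > best_score:
            best_entry, best_score = entry, score
    if best_entry is not None and best_score >= similar_threshold:
        return "yellow", best_entry["answer"]
    return "red", None


# Invented entries, for illustration only.
knowledge_base = [
    {"question": "Do you encrypt data at rest?", "answer": "Yes."},
    {"question": "Are you SOC 2 compliant?", "answer": "Yes."},
]

for q in [
    "Do you encrypt data at rest?",
    "Do you encrypt customer data at rest?",
    "12345",
]:
    print(q, "->", classify(q, knowledge_base))
```

String similarity misses rewordings that a language model would catch, which is why the yellow and red buckets still go to a human for review.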

The first questionnaire we ran through the system took real effort. We had to build the knowledge base from scratch, confirm the mappings, and review every answer. But the second form we received had about 80% overlap with questions we’d already answered. By the third, we were close to 90%.

Each hour we spend answering one vendor’s questions now makes every future questionnaire faster. A task that used to take several hours of tedious copying, pasting, and rewording across old forms and policy documents is now mostly automated. We review the flagged items, make a few edits, and submit.

AI built me a custom tool I’d pay $100/month for

I spend a lot of time solving engineering problems related to Microsoft Word files. Our platform produces “redlines,” a type of Word file that shows the changes made to a document.

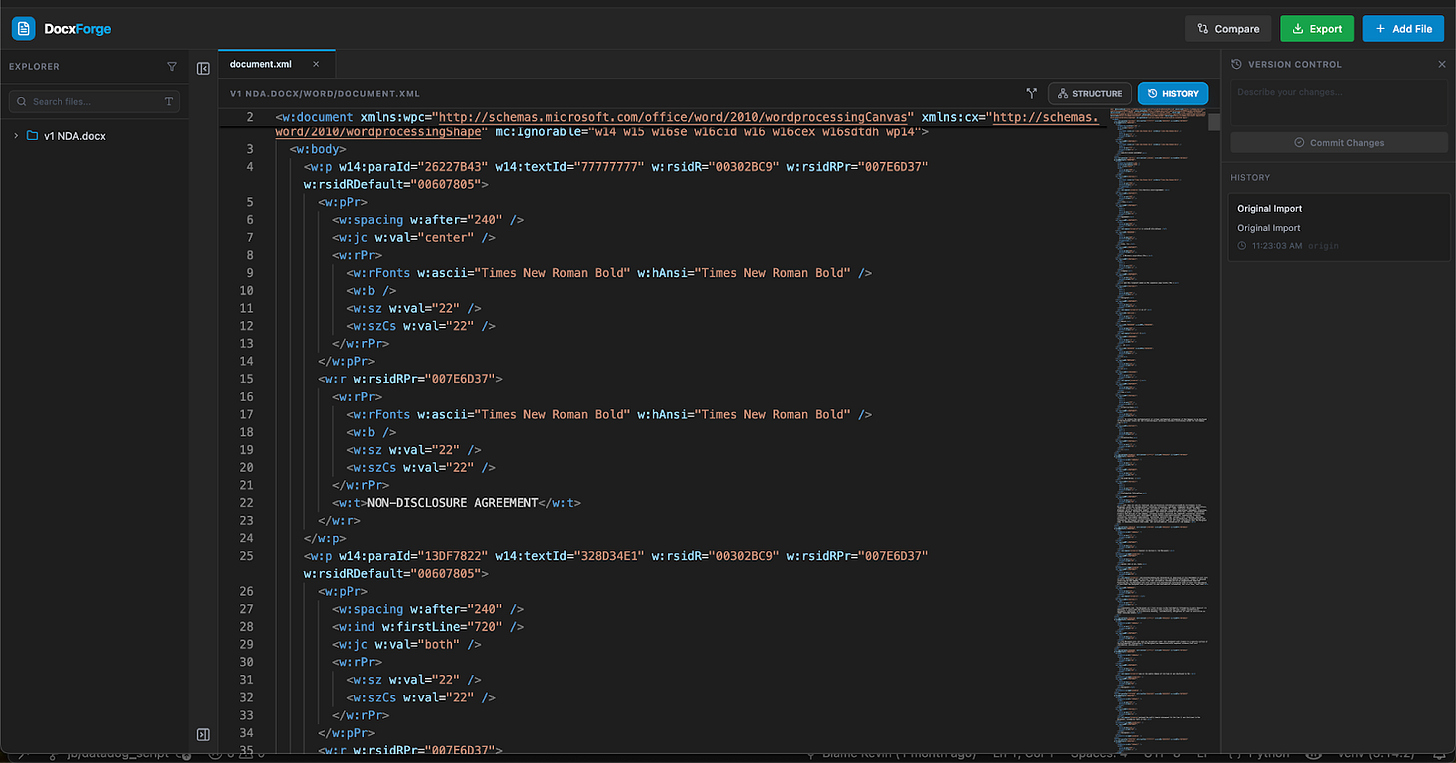

Docx, Word’s file format, is difficult to work with. It’s poorly documented and minor discrepancies in its underlying structure can cause a file to fail to open entirely.

Solving these problems is hard. It requires surgically changing minor details in the underlying structure of the document until you identify the cause.

Existing tooling to solve problems like this is limited.

My workflow looked like this: unzip the docx to extract its raw XML, open the files in my code editor, make changes, rezip it, test if it opens in Word, and repeat. All while keeping track of each hypothesis in separate files and reminding myself not to overwrite the original.
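For the curious, that unzip-edit-rezip loop looks roughly like this in Python. A docx file is a ZIP archive of XML parts, so the standard `zipfile` module is enough. The helper names `unpack_docx` and `repack_docx` and the file names are made up for this sketch, and whether Word opens the result still depends on the XML you put inside.

```python
import zipfile
from pathlib import Path


def unpack_docx(docx_path, work_dir):
    """Extract the raw parts of a docx (a ZIP archive) into work_dir."""
    work_dir = Path(work_dir)
    with zipfile.ZipFile(docx_path) as package:
        package.extractall(work_dir)
    return work_dir


def repack_docx(work_dir, output_path):
    """Zip work_dir back into a docx, refusing to overwrite an existing file."""
    work_dir, output_path = Path(work_dir), Path(output_path)
    if output_path.exists():
        raise FileExistsError(f"refusing to overwrite {output_path}")
    # Collect the parts before creating the archive so the archive itself
    # is never picked up if it happens to live inside work_dir.
    parts = sorted(p for p in work_dir.rglob("*") if p.is_file())
    with zipfile.ZipFile(output_path, "w", zipfile.ZIP_DEFLATED) as package:
        for part in parts:
            # Archive names are relative paths with forward slashes.
            package.write(part, part.relative_to(work_dir).as_posix())
    return output_path


# Hypothetical usage: one output file per hypothesis, original untouched.
# work = unpack_docx("broken.docx", "work/attempt-1")
# ...edit work/attempt-1/word/document.xml in an editor...
# repack_docx(work, "attempts/attempt-1.docx")
```

Writing each attempt to a new file is the cheap version of the hypothesis tracking I was doing by hand.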

With Google AI Studio (a platform for agentic AI coding like Claude Code), I built a tool customized to my exact workflow. It opens docx files in the format I need, lets me make changes and quickly test if they solve the problem, tracks every modification I’ve attempted with version control, and has built-in diffing to compare document structures across files.
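I never read the generated code, so I can’t show how the tool does its diffing. As a rough idea of what comparing document structures involves, though, here is a minimal sketch that pretty-prints one XML part from each of two docx files and diffs them line by line. The function names are hypothetical; `word/document.xml` is the part that holds the main document body.

```python
import difflib
import zipfile
from xml.dom import minidom


def read_part_lines(docx_file, part):
    """Read one XML part from a docx and return it pretty-printed as lines."""
    with zipfile.ZipFile(docx_file) as package:
        raw = package.read(part)
    # Word usually writes each part as one long line, so re-indent it
    # to get a diff that is readable element by element.
    return minidom.parseString(raw).toprettyxml(indent="  ").splitlines()


def diff_part(docx_a, docx_b, part="word/document.xml"):
    """Return unified diff lines for the same part in two docx files."""
    return list(
        difflib.unified_diff(
            read_part_lines(docx_a, part),
            read_part_lines(docx_b, part),
            fromfile=f"a/{part}",
            tofile=f"b/{part}",
            lineterm="",
        )
    )


# Hypothetical usage:
# for line in diff_part("opens-fine.docx", "fails-to-open.docx"):
#     print(line)
```

Pretty-printing changes whitespace, so this is for reading differences, not for producing a file to hand back to Word.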

It would have taken months to build something like this without AI. If it were a product on the market, I’d have easily paid $50-$100/month for it a year ago.

But instead, I built it in a little over an hour without looking at a single line of code.

Automated coding is a very big deal

Some people are skeptical that AI will have much of an impact outside of coding. Setting aside the dubious implication that automated coding will only affect the software engineering profession, I deliberately chose examples that could apply to other professions.

The outputs of these exercises pertain to software startups, but the contours of the problems are general. Analyze data from multiple sources to create a report. Find precedent questionnaires to fill out a compliance form. Build a tool for a workflow that the market doesn’t have a solution for.

Many, many roles in the economy deal with problems like these. And that’s before you consider the impact on coding itself, which is already enormous.

Coding projects that would have taken days a year ago now take hours. With the latest models, I can often one-shot a full feature end-to-end in a single prompt. If we have a bug, Claude Code can usually fix it with just the error logs. I’ve worked through many tasks in recent months that sat on the backlog for years because they weren’t quite worth the time. Now they hardly take any time at all.

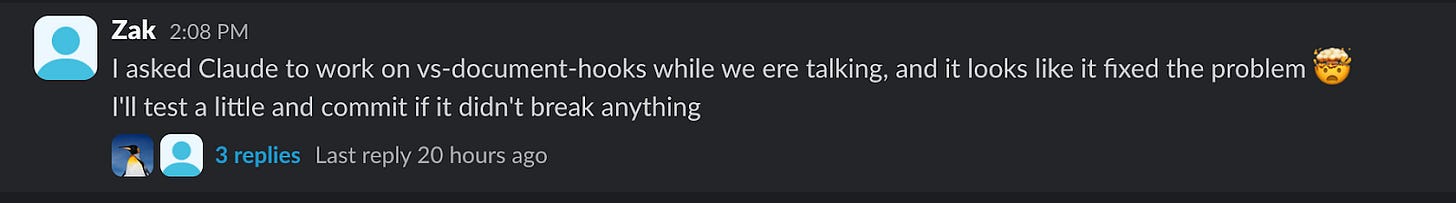

One of our engineers, Zak, has historically been skeptical of AI coding. Before the tools had matured (i.e., four months ago), he usually felt that it took more effort to babysit the AI than to just write the code himself.

Yesterday, he was working through a particularly thorny problem in our document rendering system. After mentioning on a call that it would require a significant deep-dive to solve, he messaged this to the team.

A year ago, I daydreamed about what our team could accomplish with five more engineers. Now it’s difficult to imagine what we’d even have them work on.

But is it intelligent?

Now look, if you want to debate whether Claude Code doing my work for me in a fraction of the time satisfies your capital-T, True definition of “intelligence”, by all means, go for it. No true Scotsman, right?

If you don’t think all this AI stuff is that impressive, you’re entitled to that opinion. But if automating coding, the poster child for the most important skill of the digital age, in only a few years doesn’t impress you, I’d love to know what does.

But when considering whether these tools have the power to be economically transformational, debates like these are beside the point. The tools work. Now. In a real tangible sense that eludes philosophical pontification.

This is the tip of the iceberg. Diffusing new technology through the economy takes a while, and I still know software engineers who don’t use Claude Code. As evidenced by recent debates, even pundits purporting to speak with authority on AI haven’t used these tools!

In time, however, that will change and professionals throughout the economy will have access to capabilities that will make their heads spin. In light of that, I believe it is worthwhile to spread the message that, yes, AI actually is quite a big deal. People should pay attention to and experiment with AI instead of writing it off as vaporware.

I know critics will respond by accusing me of being an insider shilling VC propaganda. “See! He just wants you to use AI!” For the record, I am not affiliated with or invested in any AI company, nor is my startup Version Story an “AI” company. I have no financial stake in your AI-skepticism. I have simply experienced first-hand what AI is capable of.

But the good news is you don’t need to trust me! You don’t need to trust the skeptics either. You can try it yourself.

It’s not that hard. Set aside 30 minutes and find a decent YouTube tutorial to learn how to use the terminal and Claude Code. Build that app idea that you and your college roommate talked about but never built because you didn’t know how to code. Take a stab at automating that tedious task at your job that you hate so much.

You can just do it. No one’s stopping you. And when it works, I think you’ll begin to see what all the hype is about.